I’ve been continuing to take new exams as they come out. Having recently taken the MB-400 exam (see MB-400 Power Apps & Dynamics 365 Developer Exam), I was slightly surprised to see the announcement that it was going to be replaced!

Admittedly, I was also surprised (in a good way) that I passed the MB-400, not being a developer! It’s been quite amusing to tell people that I’m a certified Microsoft Dynamics Developer. It definitely puts a certain look on their faces, which always cracks me up.

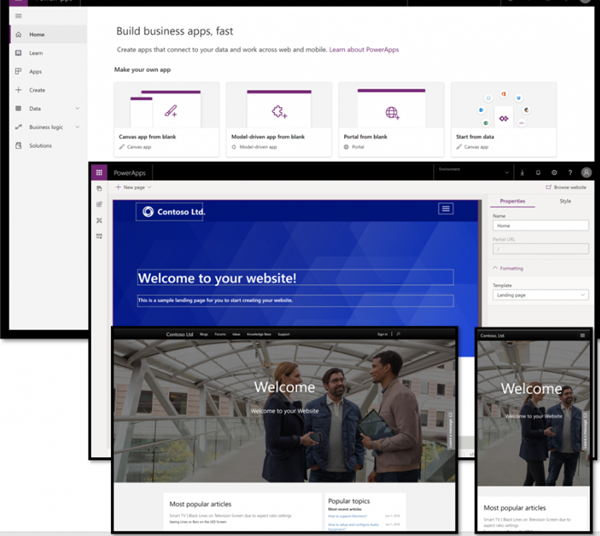

Then again, the general approach seems to be to move all of the ‘traditional’ Dynamics 365 exams to the new Power Platform (PL) format. This obviously includes re-doing the exams to be more Power Platform centric, covering the different parts of the platform rather than just the ‘first party apps’. It’s going to be interesting to see how this landscape extends & matures over time.

The learning path came out in the summer, and is located at https://docs.microsoft.com/en-us/learn/certifications/exams/pl-400. It’s actually quite good. There’s quite a lot that overlaps with the MB-400 exam material, as well as the information that’s recently been covered by Julian Sharp & Joe Griffin.

The official description of the exam is:

Candidates for this exam design, develop, secure, and troubleshoot Power Platform solutions. Candidates implement components of a solution, including application enhancements, custom user experience, system integrations, data conversions, custom process automation, and custom visualizations.

Candidates must have strong applied knowledge of Power Platform services, including in-depth understanding of capabilities, boundaries, and constraints. Candidates should have a basic understanding of DevOps practices for Power Platform.

Candidates should have development experience that includes Power Platform services, JavaScript, JSON, TypeScript, C#, HTML, .NET, Microsoft Azure, Microsoft 365, RESTful web services, ASP.NET, and Microsoft Power BI.

So the PL-400 was announced on the Wednesday of Ignite this year (at least in my timezone). Waking up to hear of the announcement, I went right ahead to book it! Unfortunately, there seemed to be some issues with the Pearson Vue booking system. It took around 12 hours to be sorted out, & I then managed to get it booked Wednesday evening, to take it Thursday.

So, as before, it’s not permitted to share any of the exam questions. This is in the rules/acceptance for taking the exam. I’ve therefore put together an overview of the sorts of questions that came up during my exam. (Note: exams are composed from question banks, so there could be many things that weren’t included in my exam, but could be included for someone else!) It’s also in beta at the moment, which means that things can obviously change.

There were a few glitches during the actual exam: one or two questions with answers that didn’t make sense (e.g. line 30 does X, but the code sample finished at line 18), and question numbers that seemed to jump back & forth (the first time that’s happened to me). I guess that I’ve gotten used to at least ONE glitch happening somewhere, so this was par for the course.

I’ve tried to group things together as best I can (from my recollection), to make it easier to revise.

- Model-driven apps

  - Charts. How they work, what drives them, what they need in order to actually work, configuring them

  - Visualisation components for forms. What they are, examples of them, what each one does, when to use each one

  - Custom ribbon buttons. What these are, different tools able to be used to create/set them up, troubleshooting them

  - Entity alternate keys. What these are, when they should be used, how to set them up & configure them

  - Business Process Flows. What these are, how they can be used across different scenarios, limitations of them

  - Business Rules. What these are, how they can be used across different scenarios, limitations of them

- Canvas apps

  - Different code types, expressions, how to use them & when to use them

  - Network connectivity, & how to handle this correctly within the app for data capture (this was an interesting one, which I’ve actually been looking at for a client project!)

  - Power Apps solution checker. How to run it, how to handle issues identified in it

- Power Automate

  - Connectors. What these are, how to use them, security around them, querying/returning results in the correct way

  - Triggers. What a trigger is, how they work, when to use/not use them

  - Actions. What these are, how they can be used, examples of them

  - Conditions. What these are, how to use them, types of conditions/expressions/data

  - Timeouts. How to use them, when to use them, how to configure them

- Power Virtual Agents. How to set them up, how to configure them, how to deploy them, how to connect them to other systems

- Power Apps portals. Different types, how to set them up, how to configure them, how they can work with underlying data & users

- Solutions

  - Managed, unmanaged, differences between them, how to use each one

  - Deploying solutions. Different methods that can be used to do it, best practice for each, when to use each one

  - Package Deployer & how to use it correctly

- Security

  - All of the different security types within Dynamics 365/Power Platform. Roles/Teams/Environment/Field level. How to set up, configure, use in the right way

  - Hierarchy security

  - Wider platform security. How to use Azure Active Directory for authentication methods, what to know around this, how to set it up correctly to interact with CDS/Dynamics 365

  - What authentication methods are allowed, when/how they can be used, how to configure them

- ‘Development type stuff’

  - APIs. The different APIs that can be used, methods that are valid with each one, the Organisation service

  - Discovery URLs. What these are, which ones are able to be used, how they’d be used/queried

  - Plugins. How to set up, how to register, how to deploy. Steps needed for each

  - Plugin debugging/troubleshooting. Synchronous vs asynchronous

  - Component types. Actions/conditions/expressions/data operations. What these are, when each is used

  - Custom ribbon buttons. What these are, different tools able to be used to create/set them up, troubleshooting them

  - JavaScript web resources. How to use these correctly, how to set them up on entities/forms/fields

  - Power Apps component framework (PCF). What these components are, how to develop them, how to use them in the right way

- System Design

  - Entity relationship types. What they are, what each one does, how they work, when to use them appropriately. Tools that can be used to display them for system design purposes

  - Storage considerations across different types, including CDS & Azure options

- Azure items

  - Azure Consumption API. How to monitor, how to handle, how to change/update

  - Azure Event Grid. What it is, the different ways in which it can be used, when each source should be used

- Dynamics 365 for Finance. Native functionality included in it

Thinking back, the biggest surprise for me was the inclusion of Dynamics 365 for Finance. Generally the world is split into ‘front of house’ (being Dynamics 365/Power Platform), and ‘back of house’ (Dynamics 365 for Finance & Supply Chain Management). The two don’t really overlap, though they’re supposed to be coming closer together over time. Given that this is going to happen, I guess it’s only natural that exam questions spanning both sides will come up!

Overall it was quite a good exam. Some of the more ‘code-style’ questions were somewhat out of my comfort zone, and I’ll freely admit to guessing some of the answers around them! Time will tell, as they say, to see how I’ve done in it.

I hope that this is helpful for anyone who’s thinking of taking it – good luck, and please do drop a comment below to let me know how you found it!
